The next level in AI

Isn’t it curious that, in the realm of artificial intelligence, the most difficult tasks for AI researchers to model turn out to be the easiest tasks for humans to perform?

This paradox has frustrated AI researchers for decades, partly because of our limited understanding of human intelligence, but also because of a growing disparity between the academic focus on machine learning and the technological implementation of human thinking.

Many people consider the game of chess, and its eastern relative, the game of Go, to represent singular examples of cognitive difficulty for humans, and yet both of these games were conquered by machine systems before even the most primitive of biological behaviors (such as vision and image comprehension, or just innate cognitive planning and problem-solving abilities) have been demonstrated in a machine form.

Contemporary AI researchers will proudly proclaim that the machine mastery of chess and Go demonstrates that machines can indeed learn, but can they think?

The majority of today’s “AI” is based on decades-old connectionist technologies, which reduced the biological networks they were modeled after (networks which change over time) to nets that have a fixed structure.

So a machine implementation of human thinking can only come about with a return to Nature’s marvelous neural creations, and an understanding of their complex behaviors. The latent inability of today’s connectionist technology to model the real world demonstrates that a design for an AI model which can actually think cannot even be contemplated before a thorough understanding of natural intelligence has been brought into the conversation.

Thinking means going beyond mere learning; it means integrating learned representations into the entire fabric of what has been learned previously, in a way advantageous to an organism’s survival.

But the AI of BigTech and the designs in academia cannot model this behavior in any sense of the word. Nor does contemporary machine learning produce representations at any level that can be integrated into larger systems without great difficulty.

There is, however, one design that is setting the boundaries for machine thinking by demonstrating the behaviors that Nature evolved for natural intelligence:

The Organon Sutra. See why the Organon Sutra is different from the AI from BigTech

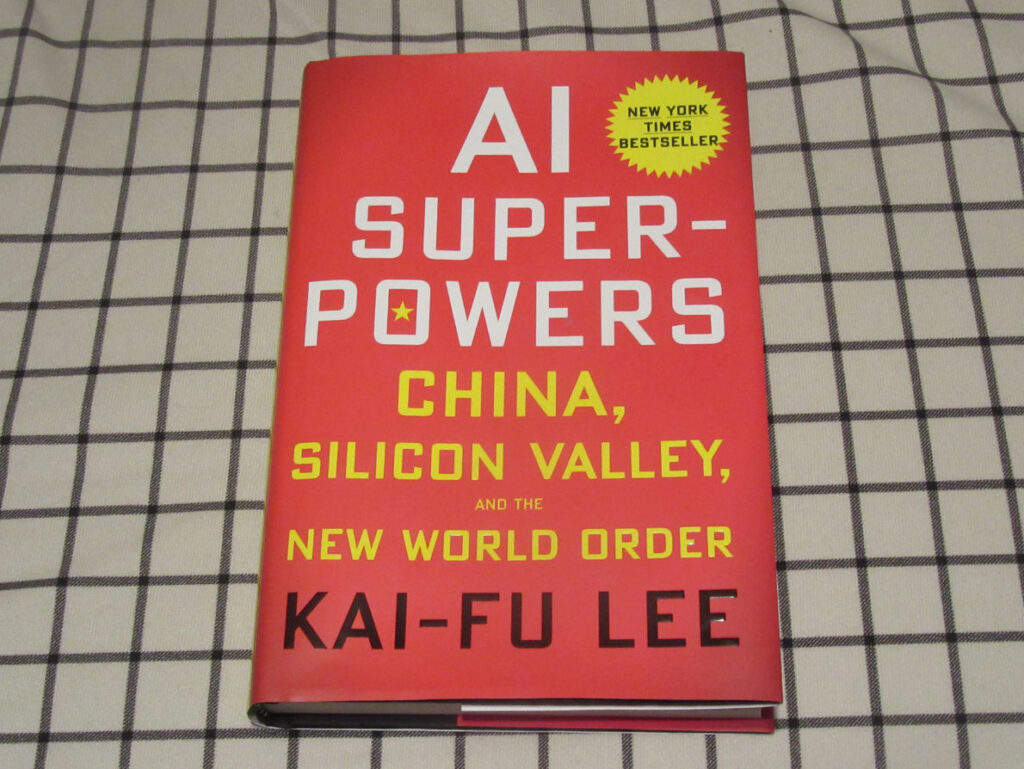

In a compelling book titled “AI Superpowers: China, Silicon Valley, and the New World Order”, Dr. Kai-Fu Lee has framed the conversation on contemporary AI in a new light, arguing that AI leadership equates to leadership in all of technology, with consequential implications for a new world order.

And yet, Dr. Lee suggests a competition between East and West, with both competitors struggling to squeeze out an advantage using essentially the same decades-old technology.

Regardless of his contention that a dominating form of this connectionist technology can be achieved, the First Frontier Project is changing the conversation by demonstrating that an entirely unique technology can prove to be the enduring solution to this competition, and is presenting Dr. Lee with an open challenge: Can “Deep Learning” systems actually think?

In their zeal to develop the artificial neural network architecture to its fullest potential, BigTech and academia have been so collectively focused on a data-driven approach to “deep learning” that they have overlooked the fundamental constraints of their technology. These constraints concern fundamental behaviors which Nature solved hundreds of millions of years ago, and which must form the foundation for any machine technology that might strive to “reason” about its world.

So the First Frontier Project would like to offer Dr. Lee a channel to address this open challenge, and invites a reply through this contact page: Contact-page link

Logic only allows us to reason about that which we know of. But, because it is based on a functional mathematics and the axiomatics of symbolic logic, the narrow AI of today’s BigTech and academia cannot even begin to reason about a world full of unknowns, and will never come close to demonstrating the emergent behaviors of natural intelligence.

And this has been seen in the resounding failure of the neural network “machine learning” model to successfully demonstrate a functional self-driving car technology, the result of that fundamental constraint which prevents the machine learning architecture from being scaled up into any form which can address real world problems.

A fundamental constraint which has been solved by the Organon Sutra, a machine design which can be scaled up to tackle the unknowns of the world. Because it has what the “machine learning” systems of BigTech don’t have, the technology from the First Frontier Project can develop a new AI-enabled trillion-dollar online industry. Stay tuned.

See the Organon Sutra’s exposition of natural intelligence

The first AI to think? (As opposed to the industry-wide paradigm of an intelligent algorithm which merely computes…) You can change the conversation here